RESTing Around with PBCS

KScope Recap

G’day All,

So a lightning quick recap of KScope17.

Cameron celebrated VE day

Celvin met a deer

Opal had a sugar high

Gary drove a train

Ludovic ate some tacos

Natalie experienced Puppy Love

Jason went to the danger zone

Rodrigo grew a moustache

Ricardo held an axe

Jake Turrell ransacked the house of Pacman

Edward Roske’s Hat had a crisis of confidence

Tim G was English

And Newbie of the Year Neviana literally did everything

I’m not one to wax lyrical, but y’all are amazing. I’m honoured to call you my friends.

So – what’s next

So after an amazing week, returning from KScope17 I found myself full of verve and enthusiasm, motivation and endorphins

Actually, Becky is always this enthusiastic…bad example.

So I figured I’d hit the ground running and bang out multiple quick posts about the REST API in PBCS. Also gives me an excuse to make bad REST puns through the entire post.

Needless to say, 4 weeks, 1 SR, 2 OTN posts, hours trolling though the amazing John Goodwin’s blog backlog and some urgent twitter DM’s to the guru and I’m finally ready to post.

RESTating the background

So, I could write a whole background of what a REST API is and it’s benefit to developers – but I’ll leave this to the very talented Jake Turrell. This is his recorded session from KScope 16, where he discusses the anatomy of a REST API request, talks through JSON and does a live demo (because doing something completely crazy and unsupported in front of a live audience is ALWAYS a great idea!).

It was one of my favourite presentations from KScope16 so I cannot strongly recommend it enough!

Without further RESTraint

So now you know what the REST API can do, let’s throw some code at the wall and see what sticks.

I’m going to walk through a very simple (and oft repeated process!) of uploading a datafile to a PBCS instance and triggering a data load rule.

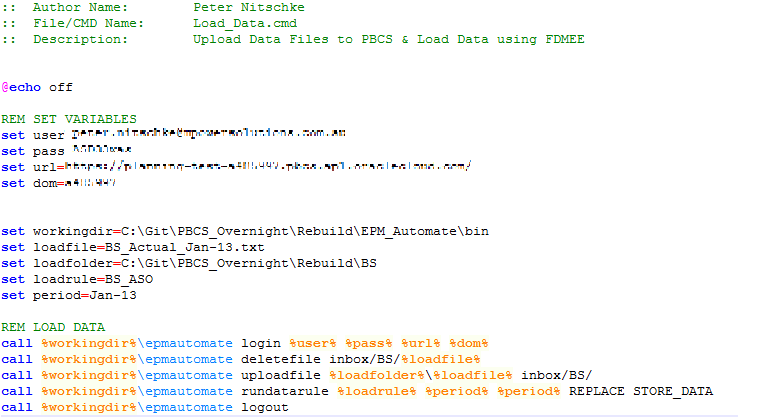

Firstly though, let’s see what the same process looks like in EPMAutomate.

The code lines are fairly simple – Oracle has exposed REST functions through very simple batch parameters

Steps are:

- Login

- Delete File

- Upload File

- Trigger data load

- Logout

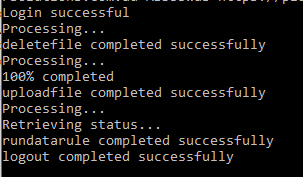

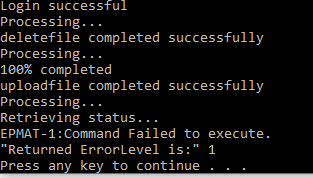

And this is what it looks like for a successful run:

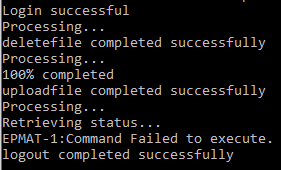

And this is what it looks like with a failed load – I’ve added a data record for an entity that doesn’t exist

UncleaREST logging ever…

So we know we ‘can’ do it through EPMAutomate, but that logging is pretty atrocious. For anyone wondering, the EPMAutomate command does return an Errorlevel, but it doesn’t return any real details and there is no way to easily identify and grab the log.

So let’s go to the REST API.

The four commands we are going to use are:

DELETE /interop/rest/{api_version}/applicationsnapshots/{applicationSnapshotName

POST /interop/rest/{api_version}/applicationsnapshots/{applicationSnapshotName}/contents? q={"isLast":true,"chunkSize":444,"isFirst":true,"extDirPath":inbox\}

POST aif/rest/V1/jobs {Payload}

GET /interop/rest/{api_version}/applicationsnapshots/{applicationSnapshotName}/contents

My code language of choice for today is Powershell – I’m still a bit rusty with Groovy, and Powershell has a very useful set of REST functions built in.

Define the headRESTS

# Parse the config file

$Config = "C:\Git\PBCS_Overnight\Rebuild\" + $Env + "_Config.xml" "

[xml]$ConfigFile = Get-Content $Config

# Import configuration settings

$Username = $ConfigFile.Settings.ClientSettings.Username

$Password = $ConfigFile.Settings.ClientSettings.Password

$Env = $ConfigFile.Settings.ClientSettings.Env

$Domain = $ConfigFile.Settings.ClientSettings.Domain

$URL = $ConfigFile.Settings.ClientSettings.URL

$LogFolder = $ConfigFile.Settings.ScriptSettings.LogFolder

#Params

$Env = "Test"

$FiletoLoad = "C:\Git\PBCS_Overnight\Rebuild\BS\BS_Actual_Jan-13.txt"

$Rule = "BS_ASO"

#REST API URLS

$RESTLCM = "interop/rest/11.1.2.3.600/applicationsnapshots/"

$RESTData = "aif/rest/V1/jobs"

#Encode the authorisation

$EncodedAuthorisation = [System.Text.Encoding]::UTF8.GetBytes($Domain + "." + $Username + ':' + $password)

$EncodedPassword = [System.Convert]::ToBase64String($EncodedAuthorisation)

$headers = @{"Authorization"="Basic $($EncodedPassword)"}

So the first tasks are to set variables, pass through some parameters and define the authorisation headers. I’m going to use the very elegant get-content function to pull all my variables from an XML file (read more about that here). In addition, I’m defining the specific REST API URLs for both the LCM and Data load functions.

We also need to create an encoded header for authentication – the format of which is as follows:

Domain.Username:Password

This then needs to be encoded into UTF8 and THEN converted into a base64string and added to a JSON header.

#Encode the authorisation

$EncodedAuthorisation = [System.Text.Encoding]::UTF8.GetBytes($Domain + "." + $Username + ':' + $password)

$EncodedPassword = [System.Convert]::ToBase64String($EncodedAuthorisation)

$headers = @{"Authorization"="Basic $($EncodedPassword)"}

Write-host $EncodedPassword

YTQ4NTk5Ny5wZXRlci5uaXRzY2hrZUBtcG93ZXJzb2x1dGlvbnMuY29tLmF1OkFTRDMzd2Fz

Basically leaving you with a header record as follows that gets passed through to every REST query.

{

"Authorization"="Basic (YTQ4NTk5Ny5wZXRlci5uaXRzY2hrZUBtcG93ZXJzb2x1dGlvbnMuY29tLmF1OkFTRDMzd2Fz)"

}

RESTringing

#Parse the Folder Path $LoadFile = Split-Path -Path $FiletoLoad -leaf -Resolve $LoadPath = Split-Path -Path $FiletoLoad $LoadParent = Split-Path (Split-Path $FiletoLoad -Parent) -Leaf #Parse the filename $inboxfolder = "inbox\" + $LoadParent + "\" $LoadPeriod = ([io.fileinfo]"$LoadFile").basename.Substring(([io.fileinfo]"$LoadFile").basename.get_Length()-6)

We can then split up the folder and file names to generate all of the required parameters. This is where using a ‘real’ code language is incredibly helpful – giving you a set of string and file system parsing parameters rather than needing to roll your own.

Below is my nice final set of variables.

Write-host $LoadFile write-host $loadpath write-host $loadparent write-host $inboxfolder write-host $loadperiod BS_Actual_Jan-13.txt C:\Git\PBCS_Overnight\Rebuild\BS BS inbox\BS\ Jan-13

One item that will become important later. I’m using a windows box, and therefore all of the current file pathing has “\” slashes for deliminators.

RESTage the data

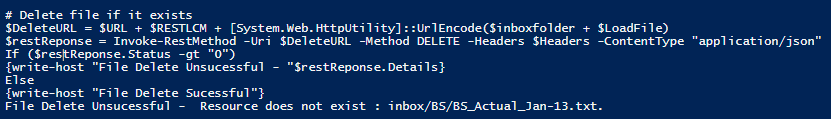

# Delete file if it exists

$DeleteURL = $URL + $RESTLCM + [System.Web.HttpUtility]::UrlEncode($inboxfolder + $LoadFile)

$restReponse = Invoke-RestMethod -Uri $DeleteURL -Method DELETE -Headers $Headers -ContentType "application/json"

If ($restReponse.Status -gt "0")

{write-host "File Delete Unsuccessful - "$restReponse.Details}

Else

{write-host "File Delete Successful"}

So we finally get to our first REST command – the delete function.

For this command we need to URL encode the file path of the target to pass it through

Basically that converts the following path:

inbox/BS/BS_Actual_Jan-13.txt

into

inbox%5cBS%5cBS_Actual_Jan-13.txt

We then send the REST Delete command with the authentication header and the URL encoded filename. We also set the content type:

-contenttype "application/json"

in order to get the query return information in a JSON format.

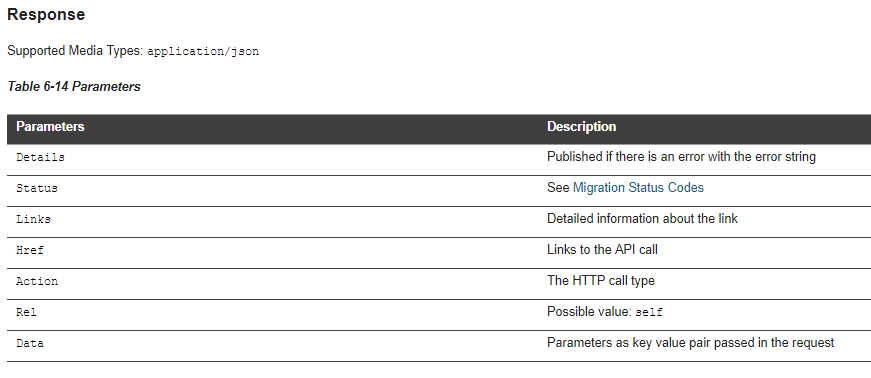

This is where we start to see the value of using the REST API for queries. If a REST query fails, we will get a set of return information – in the case of the Delete function, we get the following return dataset in a JSON format.

Which we can easily parse into an actual error handle – Note: I got this simply by running the delete function twice

Much better than EPMAutomate and a simple ErrorLevel!

PRESTaging Data

# LCM Rest URL (for uploading files)

$inboxfolder = $inboxfolder.Replace("\","/") # The insanity as to why I have to do this....

$UploadURL = $URL + $RESTLCM + $LoadFile + "/contents?q={""isLast"":true,""chunkSize"":500,""isFirst"":true,""extDirPath"":""$inboxfolder""}"

#Upload File

$restReponse = Invoke-RestMethod -Uri $UploadURL -Method Post -Headers $Headers -Infile ($LoadPath + "\" + $LoadFile) -ContentType "application/octet-stream"

If ($restReponse.Status -gt "0")

{write-host "File Upload Unsuccessful - "$restReponse.Details}

Else

{write-host "File Upload Successful"}

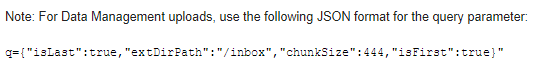

Now we deal with the upload, and this is where the ‘/’ deliminators get a little funky.

My URL is basically the REST API plus a filename, but I have to now define an extDirPath to load a file into the inbox directories for data management. Unfortunately this needs to be parsed as a Unix file path, therefore the slashes must be reversed

$inboxfolder = $inboxfolder.Replace("\","/") # The insanity as to why I have to do this....

In another ‘learn from my mistakes and frustrations’ – the documentation indicates that the folder structure is “extDirPath”:”/inbox”

However this is incorrect – you don’t need a leading ‘/’ before inbox. This is the correct final syntax:

/interop/rest/11.1.2.3.600/applicationsnapshots/BS_Actual_Jan-13.txt/contents?q={"isLast":true,"chunkSize":500,"isFirst":true,"extDirPath":"inbox/BS/"}

Finally, the -Infile command (ie: the source file) accepts the WINDOWS pathing. So the string is the full path using ‘\’ slashes.

-Infile C:\Git\PBCS_Overnight\Rebuild\BS\BS_Actual_Jan-13.txt

RESTituting Data

#Build a data load payload (JSON)

$Payload = "{

""jobType"": ""DATARULE"",

""jobName"": ""$Rule"",

""startPeriod"": ""$LoadPeriod"",

""endPeriod"": ""$LoadPeriod"",

""importMode"": ""REPLACE"",

""exportMode"": ""STORE_DATA"",

""filename"":""$($inboxfolder + $LoadFile)""

}"

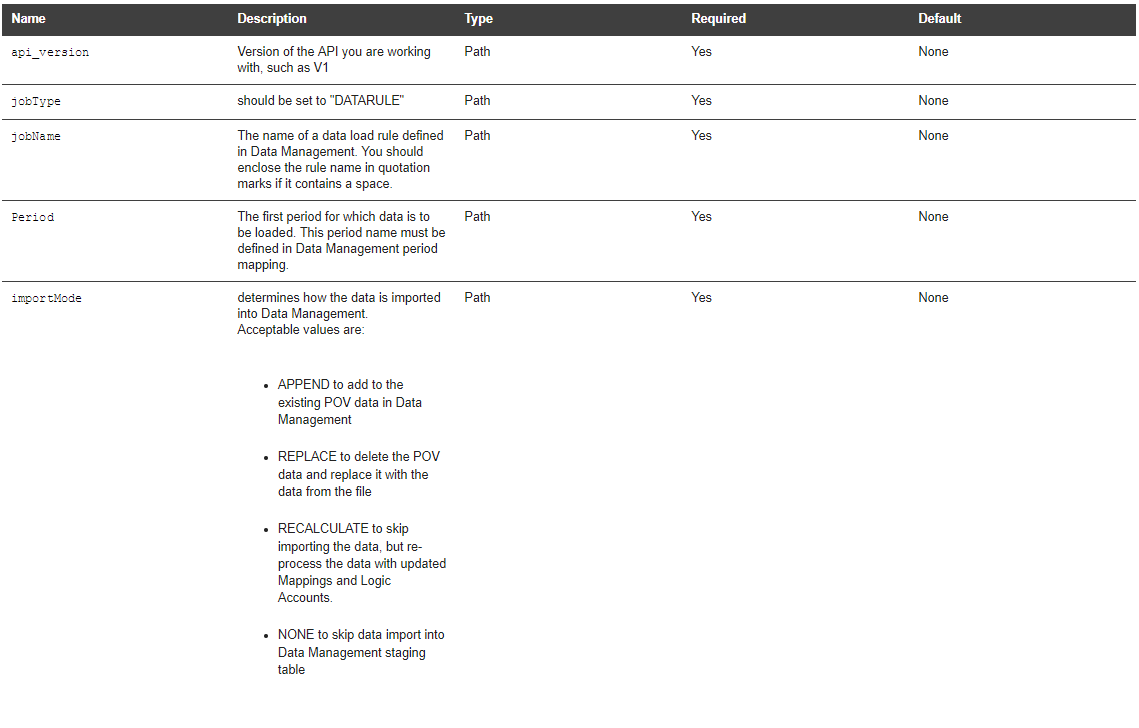

Now onto the data load – the first step is defining a REST body payload – this defines the load periods, job name, import and export modes as well as the (optional) filename.

Once again, this is where the documentation differs from the reality.

Note only a single Period is listed? Yeah. Anyways, through trial and error – mostly by looking at what the EPMAutomate commands were using – I tested startperiod and endperiod and it worked.

Obviously the moment I worked it out I found John Goodwin’s post on exactly the same topic…sigh.

Also note that the Inbox folder path needs to be the Unix format so:

"filename": "inbox/BS/BS_Actual_Jan-13.txt"

Moving on.

#Data Load Rest URL $DataLoadURL = $URL + $RESTData # invoke Request to trigger data load $restReponse = Invoke-RestMethod -Uri $DataLoadURL -Method Post -Body $Payload -Headers $Headers -ContentType "application/json" $responseURL = $restReponse.links[0].href

So here we get to the final load commands. The data load trigger is a POST command, passing through the Payload in the body, and the standard headers.

Remember that the API URLs are different between the LCM (data import\export) and the Data Management URLs. Specifically, the data management URL is

aif/rest/V1/jobs

The second command

$responseURL = $restReponse.links[0].href

is defining the rest response URL for this specific load – once again, very useful because we can poll this using a REST GET to check the status of the load.

$Jobcomplete = $false

while(-not $Jobcomplete)

{

#Call the status of the job

$Checkrunning = Invoke-RestMethod -Uri $responseURL -Method Get -Headers $Headers -ContentType "application/json"

#check if the status is still running

if ($Checkrunning.Jobstatus -eq "RUNNING")

{

#wait for 5 secs

Start-sleep -seconds 5

}

Else

{

#if the load is a failure, download the log

if($Checkrunning.Jobstatus -eq "FAILED")

{

$Logname = [System.Web.HttpUtility]::UrlEncode($restReponse.LogfileName.Replace("/","\")) <# The definition of insanity.... #>

$DownloadURL = $URL + $RESTLCM + $Logname + "/contents"

Invoke-RestMethod -Uri $DownloadURL -Method Get -Headers $Headers -ContentType "application/json" | Out-File -FilePath $($LogFolder + "\" + $Rulename + "_" + $LoadPeriod + ".txt") -Force

write-host "Job Failed - Log Downloaded -" $($LogFolder + "\" + $Rulename + "_" + $LoadPeriod + ".txt")

}

Else

{

#job completed sucessfully

write-host "Job completed Sucessfully - " $Checkrunning.jobStatus "Executed by " $Checkrunning.executedBy

}

$Jobcomplete = $true

}

}

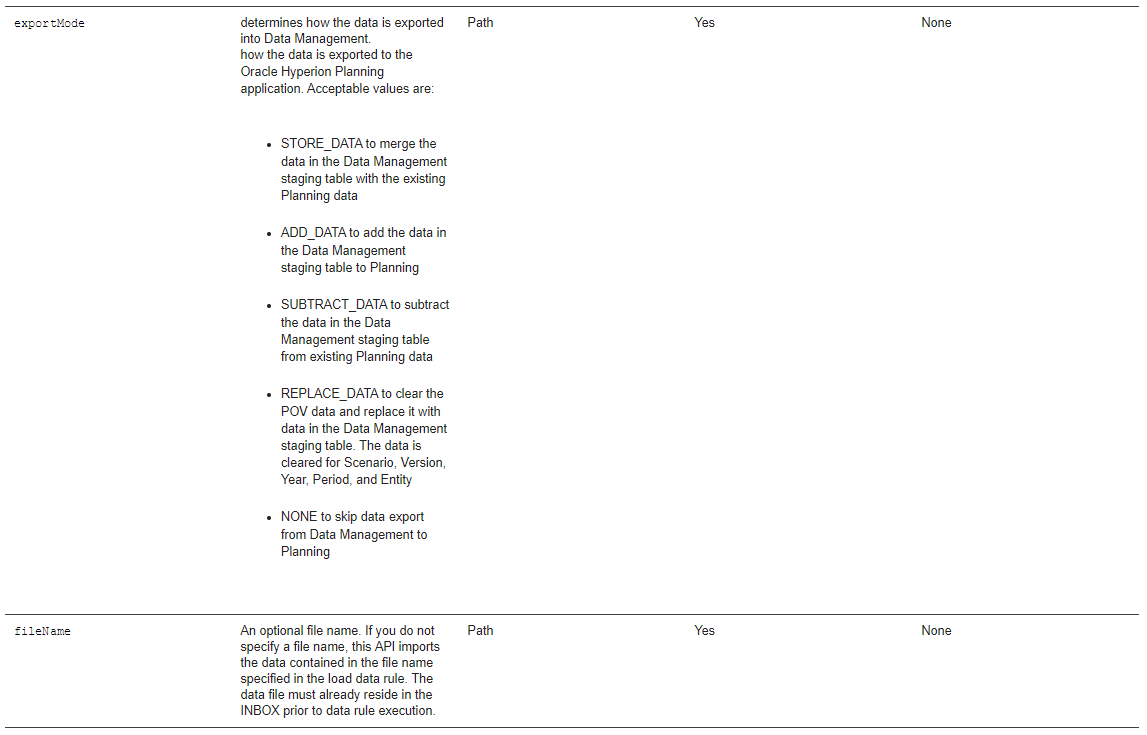

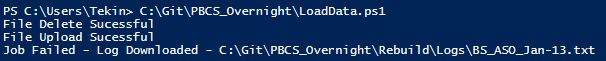

The final component of the script – and this is where using the REST API shines. By polling the job status we can check for a failed run – and then, because one of the return codes is the logfile name (see $restReponse.LogfileName) we can then selectively download that specific log file.

Note once again we have to deal with some slight insansity – the filename of the log is a returned as a windows format (‘\’ slashes) whereas to download the file we need to convert it BACK to a unix format. We then use a fairly standard GET command and write the output to a text file.

ARESTted Development

So there you have it, a full walkthrough of uploading a datafile and triggering a load rule using the REST API.

I’ll put the full code at the bottom – mostly to help anyone deal with the insanity of needing to chop and change the folder paths. Standard caveats apply, don’t run code you don’t understand in your production instances (or any instances!) and no warranties implied or otherwise.

I’ll be noting some of the updates\issues in the documentation and sending something through to Oracle – would make a lot of this development significantly easier!

Next I’ll be extending this process a bit further – actually parsing the downloaded log into actionable errors!

Any questions\issues, leave a comment. Happy RESTing!

Cheers

Pete

<#

.SYNOPSIS

This script loads in data through the Rest API and downloads the log if there are errors

.DESCRIPTION

This script requires both a target environment, and accepts a switch for a file or folder

.EXAMPLE

./LoadData.ps1 -Env Prod -Rule BS_ASO -Folder c:\Test\BS

.NOTES

Change Control

When Who What

13/15/2017 PN Created

.LINK

#>

<#

Param (

[Parameter(Mandatory=$True)]

[String]$Env = $(throw "Target Environment is mandatory"),

[Parameter(Mandatory=$True)]

[String]$Rule = $(throw "Rule name is mandatory"),

[Parameter(Mandatory=$True)]

[String]$FiletoLoad = $(throw "File name is mandatory")

)

#>

#Params

$Env = "Test"

$FiletoLoad = "C:\Git\PBCS_Overnight\Rebuild\BS\BS_Actual_Feb-13.txt"

$Rule = "BS_ASO"

# Parse the config file

$Config = "C:\Git\PBCS_Overnight\Rebuild\" + $Env + "_Config.xml"

[xml]$ConfigFile = Get-Content $Config

# Import configuration settings

$Username = $ConfigFile.Settings.ClientSettings.Username

$Password = $ConfigFile.Settings.ClientSettings.Password

$Env = $ConfigFile.Settings.ClientSettings.Env

$Domain = $ConfigFile.Settings.ClientSettings.Domain

$URL = $ConfigFile.Settings.ClientSettings.URL

$LogFolder = $ConfigFile.Settings.ScriptSettings.LogFolder

#Encode the authorisation

$EncodedAuthorisation = [System.Text.Encoding]::UTF8.GetBytes($Domain + "." + $Username + ':' + $password)

$EncodedPassword = [System.Convert]::ToBase64String($EncodedAuthorisation)

$headers = @{"Authorization"="Basic $($EncodedPassword)"}

#Parse the Folder Path

$LoadFile = Split-Path -Path $FiletoLoad -leaf -Resolve

$LoadPath = Split-Path -Path $FiletoLoad

$LoadParent = Split-Path (Split-Path $FiletoLoad -Parent) -Leaf

#Parse the filename

$inboxfolder = "inbox\" + $LoadParent + "\"

$LoadPeriod = ([io.fileinfo]"$LoadFile").basename.Substring(([io.fileinfo]"$LoadFile").basename.get_Length()-6)

#REST API URLS

$RESTLCM = "interop/rest/11.1.2.3.600/applicationsnapshots/"

$RESTData = "aif/rest/V1/jobs"

# Delete file if it exists

$DeleteURL = $URL + $RESTLCM + [System.Web.HttpUtility]::UrlEncode($inboxfolder + $LoadFile)

write-host $DeleteURL

$restReponse = Invoke-RestMethod -Uri $DeleteURL -Method DELETE -Headers $Headers -ContentType "application/json"

If ($restReponse.Status -gt "0")

{write-host "File Delete Unsucessful - "$restReponse.Details}

Else

{write-host "File Delete Sucessful"}

# LCM Rest URL (for uploading files)

$inboxfolder = $inboxfolder.Replace("\","/") # The insanity as to why I have to do this....

$UploadURL = $URL + $RESTLCM + $LoadFile + "/contents?q={""isLast"":true,""chunkSize"":500,""isFirst"":true,""extDirPath"":""$inboxfolder""}"

#Upload File

$restReponse = Invoke-RestMethod -Uri $UploadURL -Method Post -Headers $Headers -Infile ($LoadPath + "\" + $LoadFile) -ContentType "application/octet-stream"

If ($restReponse.Status -gt "0")

{write-host "File Upload Unsucessful - "$restReponse.Details}

Else

{write-host "File Upload Sucessful"}

#Build a data load payload (JSON)

$Payload = "{

""jobType"": ""DATARULE"",

""jobName"": ""$Rule"",

""startPeriod"": ""$LoadPeriod"",

""endPeriod"": ""$LoadPeriod"",

""importMode"": ""REPLACE"",

""exportMode"": ""STORE_DATA"",

""filename"":""$($inboxfolder + $LoadFile)""

}"

#Data Load Rest URL

$DataLoadURL = $URL + $RESTData

# invoke Request to trigger data load

$restReponse = Invoke-RestMethod -Uri $DataLoadURL -Method Post -Body $Payload -Headers $Headers -ContentType "application/json"

$responseURL = $restReponse.links[0].href

$Jobcomplete = $false

while(-not $Jobcomplete)

{

#Call the status of the job

$Checkrunning = Invoke-RestMethod -Uri $responseURL -Method Get -Headers $Headers -ContentType "application/json"

#check if the status is still running

if ($Checkrunning.Jobstatus -eq "RUNNING")

{

#wait for 5 secs

Start-sleep -seconds 5

}

Else

{

#if the load is a failure, download the log

if($Checkrunning.Jobstatus -eq "FAILED")

{

$Logname = [System.Web.HttpUtility]::UrlEncode($restReponse.LogfileName.Replace("/","\")) # The definition of insanity....

$DownloadURL = $URL + $RESTLCM + $Logname + "/contents"

Invoke-RestMethod -Uri $DownloadURL -Method Get -Headers $Headers -ContentType "application/json" | Out-File -FilePath $($LogFolder + "\" + $Rulename + "_" + $LoadPeriod + ".txt") -Force

write-host "Job Failed - Log Downloaded -" $($LogFolder + "\" + $Rulename + "_" + $LoadPeriod + ".txt")

}

Else

{

#job completed sucessfully

write-host "Job completed Sucessfully - " $Checkrunning.jobStatus "Executed by " $Checkrunning.executedBy

}

$Jobcomplete = $true

}

}

2 thoughts on “RESTing Around with PBCS”

Comments are closed.