RESTing around with PBCS 2 – Still RESTless

G’day All,

Two posts in two weeks!

So, this is really a continuation of the previous one. Just as a recap:

- the REST API is awesome

- It lets us do all of the same functions as EPMAutomate, but with significantly better error handling and logging abilities

- It also has a few ‘new’ tricks up it’s sleeve

- I make terrible REST puns.

So – RESTating the Obvious

Where I’d left off, we’d written a powershell script to delete the existing load file, upload a new load file, run a data load run and IF it errors download the log.

Great for one file, but what if we have a bit of data…what if we have a folder?

Well – we’re in Powershell country now!

# Define the folder items as an array

$files = Get-ChildItem $Folder

# Now for each file in the file array

Foreach ($file in $files){

#Parse the filename

$LoadFile = Split-Path -Path $file.fullname -leaf -Resolve

$LoadPath = Split-Path -Path $file.fullname

$LoadParent = Split-Path (Split-Path $file.fullname -Parent) -Leaf

$inboxfolder = "inbox\" + $LoadParent + "\"

$LoadPeriod = ([io.fileinfo]"$LoadFile").basename.Substring(([io.fileinfo]"$LoadFile").basename.get_Length()-6)

<# insert rest of code #>

}

We can just define a set of files (as defined by a folder parameter) and then iterate through through them one by one.

Express your DisinteREST

That’s great, but…two additional lines of code does not a blog post make.

How about we actually go and ‘do’ something with the log files that we’ve carefully curated.

How about we try and work out ‘what’ the errors are and attempt to do something about them.

Okay – so this is how we got the files last time:

$Logfilename = $LogFolder + "\" + $Rule + "_" + $LoadPeriod + ".txt"

$Logname = [System.Web.HttpUtility]::UrlEncode($restReponse.LogfileName.Replace("/","\")) # The definition of insanity....

$DownloadURL = $URL + $RESTLCM + $Logname + "/contents"

Invoke-RestMethod -Uri $DownloadURL -Method Get -Headers $Headers -ContentType "application/json" | Out-File -FilePath $Logfilename -Force

write-host "Job Failed - Log Downloaded -" $Logfilename "

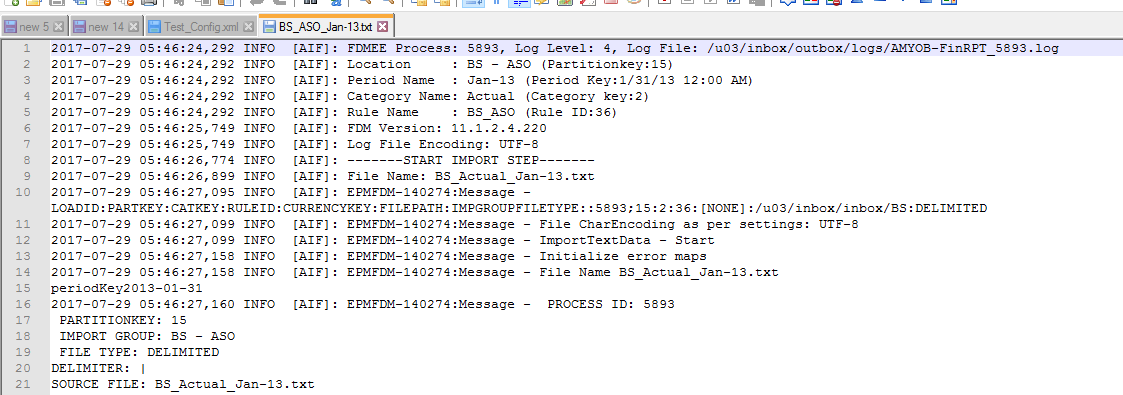

And this is what that log file looks like – remember we’re only getting the logs for the failed loads.

SquaREST peg in a Round Hole

So let’s talk about the FDMEE\Data Management log for a moment.

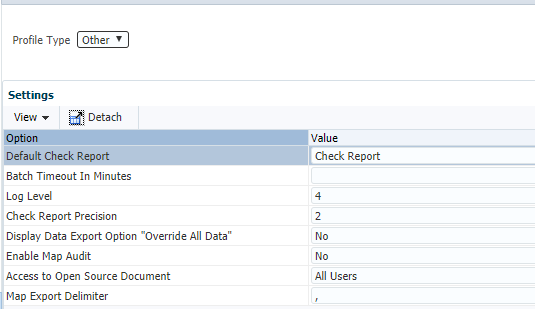

In many ways, this is literally the best log of…well, anything…that you can currently get out of PBCS. No access to the Essbase calc log, no access to the FR logs, no access to the MDXQuery logs.

The internal Calc Manager log is…not great, although it is getting better.

So this is literally a replica of the FDMEE log, with options to set the log level and capture all the detail.

But, it’s still a bit all over the place. The actual reporting of errors is pretty good, but we’re definitely going to need some parsing to get anything useful out of it.

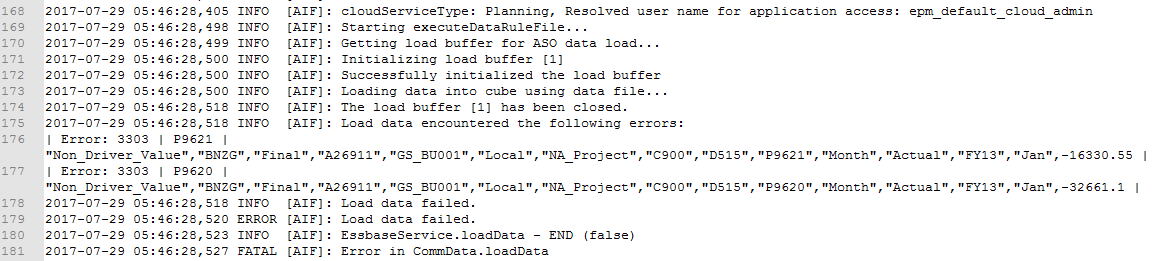

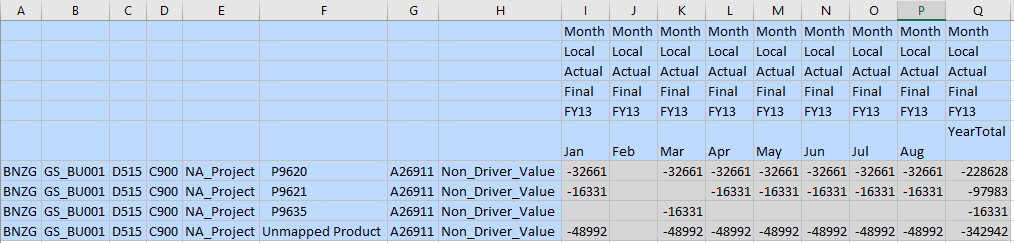

So looking through it, the errors we’re looking for are here:

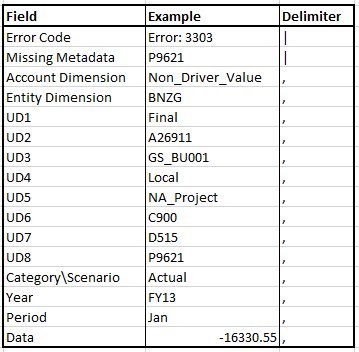

This is the standard error code for an essbase (ASO) data load with missing metadata.

| Error: 3303 | P9621 | "Non_Driver_Value","BNZG","Final","A26911","GS_BU001","Local","NA_Project","C900","D515","P9621","Month","Actual","FY13","Jan",-16330.55 | | Error: 3303 | P9620 | "Non_Driver_Value","BNZG","Final","A26911","GS_BU001","Local","NA_Project","C900","D515","P9620","Month","Actual","FY13","Jan",-32661.1 |

A pipe and comma delimited error handle broken down by line with the following fields:

Note: If anyone can think of a method of pulling that order out dynamically from anywhere else (specifically the UD1+ order), please let me know!

The FaiREST Parser

So if we grab the second field, we’ve got the specific missing members that failed. That’s fairly easy to parse in Powershell

# Okay - this is where we get fun...

$AllMissingMembers = New-Object System.Collections.Generic.List[System.Object] #create a list for the missing members

Get-Content $Logfilename | # open the file

Select-string "Error: 3303" | # find any Error 3303 strings (Missing members)

foreach{ #for each error row

$data = $_.line.split("|",[System.StringSplitOptions]::RemoveEmptyEntries).Trim(); # split them into members and data rows

$AllMissingMembers.add($data[1]) #build an list of missing members

}

$UniqueMembers = $AllMissingMembers | Select -unique # select the unique items

Without wanting to write a discourse on powershell, the functions are as follows:

- Create a generic list object

- Open the file (get-content is a little slow with bigger files, but these are pretty small)

- Grab all of the rows that start with “Error:3303”

- For each of those rows, split up the rows using the “|” delimiter

- Grab the second item in the row (arrays start at zero!) and add it to the list

- Once you’ve got all the members, create a unique list

Wham Bam – we have a unique list of members in a powershell list.

Now, to be honest we could stop here. It’s pretty easy in Powershell to just write that member list into an email – attach the data lines to give it context – and send it to the administrator.

But, 8 lines of code does still not a good blog post make. We’ve got the REST API – let’s try to get fancy.

RESTitute the Members

So one of the recent new features that I have a love and hate relationship with is dynamic member creation aka: members on the fly.

Conceptually it’s great. Useful for new projects, new employees, new assets etc. Gives the users the ability to dynamically add metadata and can be fully scripted in with @RETURN controls and validation.

It’s the biggest downside? It doesn’t work in Smartview – and in reality, that’s such a gamebreaker that I’ve gone off it completely in PBCS, or alternatively on premise ‘created’ my own with the groovy CDF.

But – this process isn’t user facing – so let’s see what we can do with it!

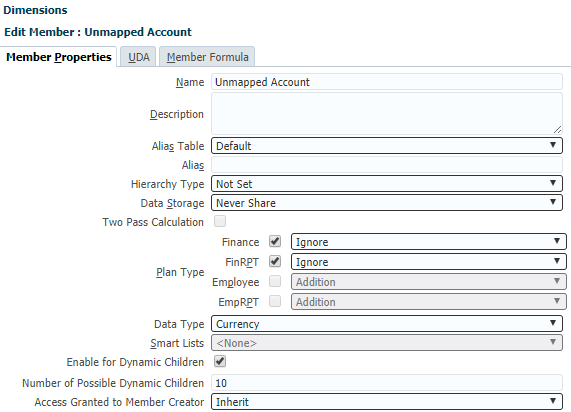

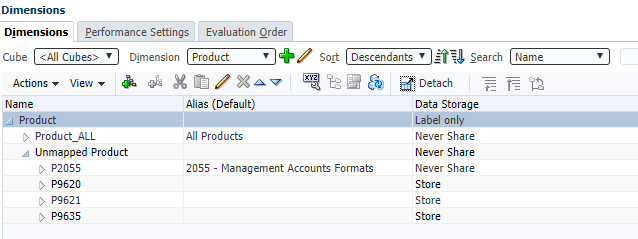

Let’s create a couple of “Unmapped” nodes in our dimensions

Obviously you’d want to select only as many members as you’re likely to need per load file – and remember these get created in the essbase cube, so don’t add 300 additional hidden members in your dense dimension!

Once that’s done and refreshed into essbase – we can call the REST command to add members to those nodes.

For this we need to connect to the Planning API:

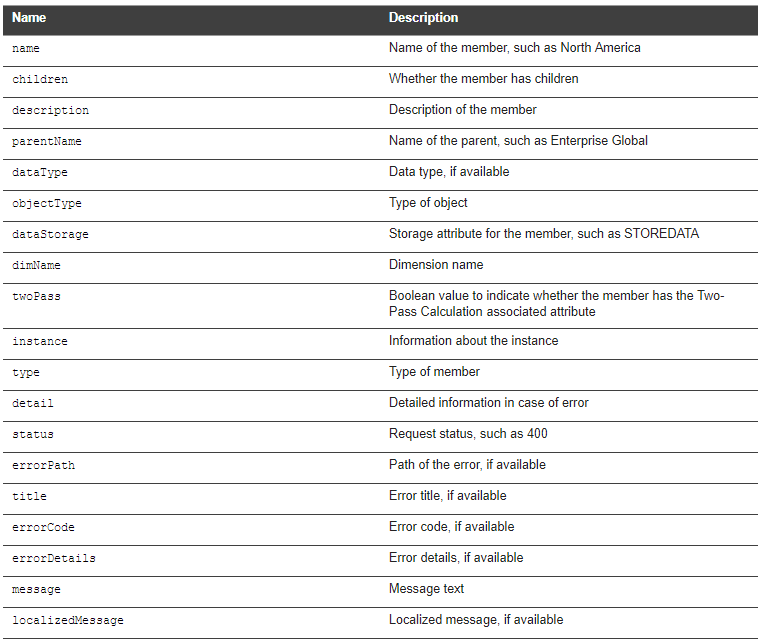

REST Resource - Add Member

POST /HyperionPlanning/rest/{api_version}/applications/{application}/dimensions/{dimname}/members

Similar to the dataload process, we’ll be creating a payload to post through the body of the REST POST query.

Similar, also to the dataload process, the documentation is slightly incorrect.

Instead of Name the member must be listed as memberName.

foreach($UniqueMember in $UniqueMembers) #Now we have a unique list of members, lets iterate and add them!

{

$CreateMbrURL = $URL + $RESTPlanning + "/dimensions/Product/members" # Create the Rest Add Member URL

# Generate the Add Member payload

$AddMemberPayload = "{

""memberName"": ""$UniqueMember"",

""parentName"": ""Unmapped Product""

}"

#Create the member

$restReponse = Invoke-RestMethod -Uri $CreateMbrURL -Method Post -Body $AddMemberPayload -Headers $Headers -ContentType "application/json"

If ($restReponse.Status -gt "0")

{write-host "Member Creation Unsuccessful - " + $restReponse.Details}

Else

{write-host $UniqueMember "- Member created Sucessfully"

}

Obviously I’m being lazy and not generating the ParentName dynamically – up to you as to how you achieve this, but assuming your dimension members are all prefixed with the same letter it should be reasonably easy. You could also create multiple dynamic child enabled members within a single hierarchy – might be useful to map unmapped Balance Sheet members into one node and unmapped P&L members to another.

Importantly, the new member seems to inherit the data storage operator from it’s parent member. Which is useful, because every possible permutation of the actual documentation that I tried didn’t work.

"dataStorage": "STOREDATA" #what the documentation says "dataStorage": "Store Data" #what the response body is "DataStorage": "StoreData" #removing spaces "DataStorage(FINRPT)": "Store Data" #Similar to the planning header

This was made even more frustrating because every failed attempt doesn’t return an error message, but instead a full 500: HTML Error. So, once again, if anyone knows the correct syntax, please let me know?

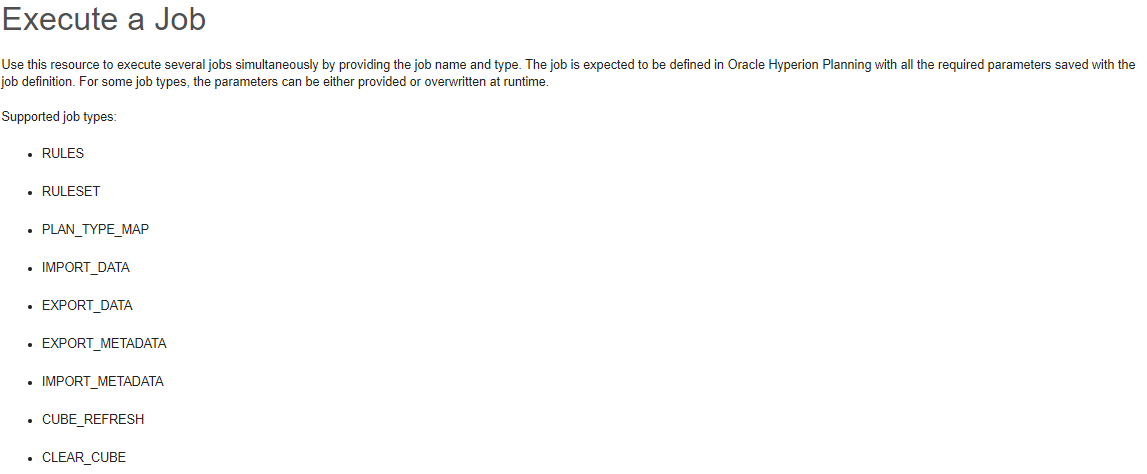

RESTructuring the cube

So now we’ve dynamically added the members that are missing, we need to refresh the essbase layer. In ‘theory’ (though I didn’t test this) – if you were loading through the Planning connection, you wouldn’t even need to do this step! But because we’re loading into the ASO cube, let’s refresh the cube.

This also opens up another REST API command: executing a job.

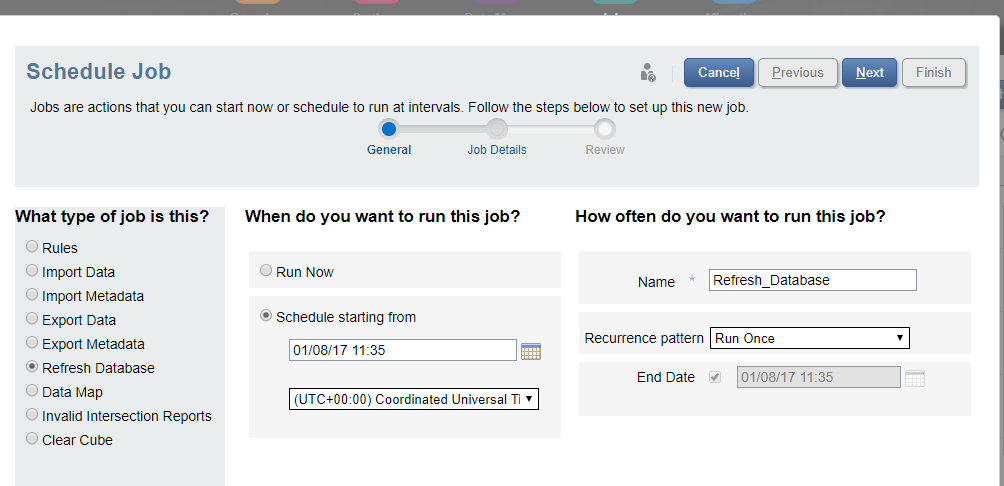

Nice and simple – first you create a job within PBCS and then you call it. Since Cameron Lackpour has already enthused the world with multiple blog posts on the topic I won’t belabour the point.

Basically create a refresh cube job and give it a name you remember.

Once that’s done, we call it very similarly to any other planning command, setup a quick payload and run it.

#Now all the metadata has been added, trigger a database refresh

$RefreshURL = $URL + $RESTPlanning + "/jobs" # Create the Rest refresh URL

# Generate the refresh cube payload

$RefreshPayload = "{

""jobType"": ""CUBE_REFRESH"",

""jobName"": ""Refresh_with_Kill""

}"

$restReponse = Invoke-RestMethod -Uri $RefreshURL -Method Post -Body $RefreshPayload -Headers $Headers -ContentType "application/json"

$RefreshURLResponse = $RefreshURL + "/" + $restReponse.jobID

$RefreshComplete = $false

while(-not $RefreshComplete)

{

#Call the status of the job

$Checkrunning = Invoke-RestMethod -Uri $RefreshURLResponse -Method Get -Headers $Headers -ContentType "application/json"

#check if the status is still running

if ($Checkrunning.status -eq "-1")

{

#wait for 5 secs

Start-sleep -seconds 5

}

Else

{

#if the refresh is a sucesss,

if($Checkrunning.status -eq "0")

{

#Job was sucessful

write-host "Refresh Sucessful - " $Checkrunning.details

}

Else

{

#job failed

write-host "Refresh Failed - " $Checkrunning.details

}

$RefreshComplete = $true

}

}

Once again – we have to roll our own process to check when the job is complete. Calling the REST API job by itself will simply run the job, but for this step we need to wait for it to complete . Perhaps surprisingly (or perhaps not), this is different to the loaddata API calls.

$RefreshURLResponse = $RefreshURL + "/" + $restReponse.jobID $Checkrunning = Invoke-RestMethod -Uri $RefreshURLResponse -Method Get -Headers $Headers -ContentType "application/json"

From the original POST REST command we get the JobID, and then we run a GET command on that Job ID and we get the status. Why Oracle decided to change between the definition of Status and Details between the Data Management API and the Planning API is a question best left for the Oracles.

RESTuffing the Turkey

(The puns are really getting tortured. Sorry)

Final Step? Reload the data of course!

# invoke Request to Reload the data

$restReponse = Invoke-RestMethod -Uri $DataLoadURL -Method Post -Body $Payload -Headers $Headers -ContentType "application/json"

$responseURL = $restReponse.links[0].href

$ReloadComplete = $false

while(-not $ReloadComplete)

{

#Call the status of the job

$ReloadRunning = Invoke-RestMethod -Uri $responseURL -Method Get -Headers $Headers -ContentType "application/json"

#check if the status is still running

if ($ReloadRunning.Jobstatus -eq "RUNNING")

{

#wait for 5 secs

Start-sleep -seconds 5

}

Else

{

#If the load is a sucess, confirm

if($ReloadRunning.Jobstatus -eq "SUCCESS")

{

#job completed

write-host "Data Reload Sucessful - " $ReloadRunning.jobStatus "Executed by " $ReloadRunning.executedBy

}

Else

#It may have failed for another reason (not metadata) - post details

{

#job failed

write-host "Data Reload Failed - " $ReloadRunning.details

}

$ReloadComplete = $true

}

}

This is simply a repeat of the original data load commands. Only item of interest is that I’m using different variables ($ReloadComplete) as strictly speaking I’m still within the error handle of the original data load process.

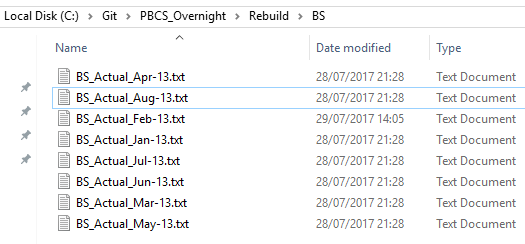

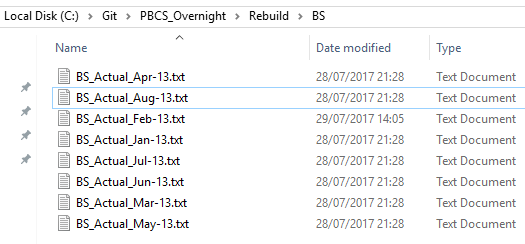

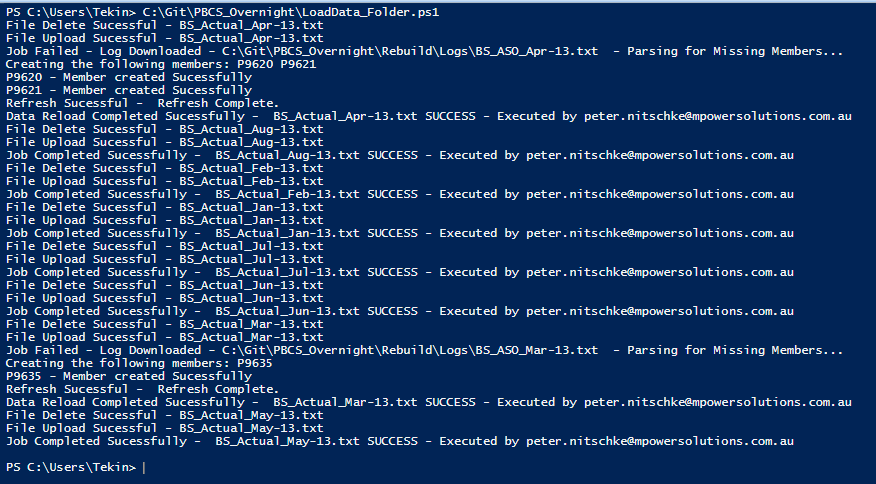

So. What does this actually look like over a folder? Specifically this folder?

Well – to be honest, it looks pretty damn amazing.

Dynamically creating metadata, refreshing the cube as it goes and reloading only the impacted data files.

Going TerRESTrial

Once completed, it’s Child’s Play…I mean Childz play…I mean Child’s Play to add the new members to an email to the Admin\Business owner to move them to their correct home.

So there you have it, a fully automated, metadata creating, folder loading, fully logging, back to base emailing, data load process that doesn’t require EPMAutomate to run.

Not too bad for less than 250 lines of code!

As always, I’m deeply indebted to previous works from Jake Turrell and John Goodwin – without whom I would have undoubtedly given up.

Full code line available below. As before don’t run code against a production environment that you don’t understand, and make sure it’s doing exactly what you want before you do!

Cheers

Pete

<#

.SYNOPSIS

This script loads in data through the Rest API and downloads the log if there are errors

.DESCRIPTION

This script requires both a target environment, and accepts a switch for a file or folder

.EXAMPLE

./LoadData.ps1 -Env Prod -Rule BS_ASO -Folder c:\Test\BS

.NOTES

Change Control

When Who What

13/15/2017 PN Created

.LINK

#>

<#Param (

[Parameter(Mandatory=$True)]

[String]$Env = $(throw "Target Environment is mandatory"),

[Parameter(Mandatory=$True)]

[String]$Rule = $(throw "Rule name is mandatory"),

[Parameter(Mandatory=$True)]

[String]$Folder = $(throw "Folder name is mandatory")

)#>

# Parse the config file

$Config = "C:\Git\PBCS_Overnight\Rebuild\" + $Env + "_Config.xml"

[xml]$ConfigFile = Get-Content $Config

# Import configuration settings

$Username = $ConfigFile.Settings.ClientSettings.Username

$Password = $ConfigFile.Settings.ClientSettings.Password

$Env = $ConfigFile.Settings.ClientSettings.Env

$Domain = $ConfigFile.Settings.ClientSettings.Domain

$URL = $ConfigFile.Settings.ClientSettings.URL

$AppName = $ConfigFile.Settings.ClientSettings.AppName

$LogFolder = $ConfigFile.Settings.ScriptSettings.LogFolder

#Encode the authorisation

$EncodedAuthorisation = [System.Text.Encoding]::UTF8.GetBytes($Domain + "." + $Username + ':' + $password)

$EncodedPassword = [System.Convert]::ToBase64String($EncodedAuthorisation)

$headers = @{"Authorization"="Basic $($EncodedPassword)"}

#REST API URLS

$RESTLCM = "interop/rest/11.1.2.3.600/applicationsnapshots/"

$RESTData = "aif/rest/V1/jobs"

$RESTPlanning = "HyperionPlanning/rest/v3/applications/$AppName"

# Define the folder items as an array

$files = Get-ChildItem $Folder

# Now for each file in the file array

Foreach ($file in $files){

#Parse the filename

$LoadFile = Split-Path -Path $file.fullname -leaf -Resolve

$LoadPath = Split-Path -Path $file.fullname

$LoadParent = Split-Path (Split-Path $file.fullname -Parent) -Leaf

$inboxfolder = "inbox\" + $LoadParent + "\"

$LoadPeriod = ([io.fileinfo]"$LoadFile").basename.Substring(([io.fileinfo]"$LoadFile").basename.get_Length()-6)

# Delete file if it exists

$DeleteURL = $URL + $RESTLCM + [System.Web.HttpUtility]::UrlEncode($inboxfolder + $LoadFile)

$restReponse = Invoke-RestMethod -Uri $DeleteURL -Method DELETE -Headers $Headers -ContentType "application/json"

If ($restReponse.Status -gt "0")

{write-host "File Delete Unsucessful - "$restReponse.Details}

Else

{write-host "File Delete Sucessful -" $LoadFile}

# LCM Rest URL (for uploading files)

$inboxfolder = $inboxfolder.Replace("\","/") # The insanity as to why I have to do this....

$UploadURL = $URL + $RESTLCM + $LoadFile + "/contents?q={""isLast"":true,""chunkSize"":500,""isFirst"":true,""extDirPath"":""$inboxfolder""}"

#Upload File

$restReponse = Invoke-RestMethod -Uri $UploadURL -Method Post -Headers $Headers -Infile ($LoadPath + "\" + $LoadFile) -ContentType "application/octet-stream"

If ($restReponse.Status -gt "0")

{write-host "File Upload Unsucessful - "$restReponse.Details}

Else

{write-host "File Upload Sucessful -" $LoadFile}

#Build a data load payload (JSON)

$Payload = "{

""jobType"": ""DATARULE"",

""jobName"": ""$Rule"",

""startPeriod"": ""$LoadPeriod"",

""endPeriod"": ""$LoadPeriod"",

""importMode"": ""REPLACE"",

""exportMode"": ""STORE_DATA"",

""filename"":""$($inboxfolder + $LoadFile)""

}"

#Data Load Rest URL

$DataLoadURL = $URL + $RESTData

# invoke Request to trigger data load

$restReponse = Invoke-RestMethod -Uri $DataLoadURL -Method Post -Body $Payload -Headers $Headers -ContentType "application/json"

$responseURL = $restReponse.links[0].href

$Jobcomplete = $false

#Error Handling for data loads

while(-not $Jobcomplete)

{

#Call the status of the job

$Checkrunning = Invoke-RestMethod -Uri $responseURL -Method Get -Headers $Headers -ContentType "application/json"

#check if the status is still running

if ($Checkrunning.Jobstatus -eq "RUNNING")

{

#wait for 5 secs

Start-sleep -seconds 5

}

Else

{

#if the load is a sucess

if($Checkrunning.Jobstatus -eq "SUCCESS")

{

#job completed sucessfully

write-host "Job Completed Sucessfully - " $LoadFile $Checkrunning.jobStatus- "Executed by" $Checkrunning.executedBy

}

Else

{

$Logfilename = $LogFolder + "\" + $Rule + "_" + $LoadPeriod + ".txt"

$Logname = [System.Web.HttpUtility]::UrlEncode($restReponse.LogfileName.Replace("/","\")) # The definition of insanity....

$DownloadURL = $URL + $RESTLCM + $Logname + "/contents"

Invoke-RestMethod -Uri $DownloadURL -Method Get -Headers $Headers -ContentType "application/json" | Out-File -FilePath $Logfilename -Force

write-host "Job Failed - Log Downloaded -" $Logfilename " - Parsing for Missing Members..."

# Okay - this is where we get fun...

$AllMissingMembers = New-Object System.Collections.Generic.List[System.Object] #create a list for the missing members

Get-Content $Logfilename | # open the file

Select-string "Error: 3303" | # find any Error 3303 strings (Missing members)

foreach{ #for each error row

$data = $_.line.split("|",[System.StringSplitOptions]::RemoveEmptyEntries).Trim(); # split them into members and data rows

$AllMissingMembers.add($data[1]) #build an list of missing members

}

$UniqueMembers = $AllMissingMembers | Select -unique # select the unique items

write-host "Creating the following members:" $UniqueMembers

#Now we have a unique list of members, lets iterate and add them!

foreach($UniqueMember in $UniqueMembers)

{

$CreateMbrURL = $URL + $RESTPlanning + "/dimensions/Product/members" # Create the Rest Add Member URL

# Generate the Add Member payload

$AddMemberPayload = "{

""memberName"": ""$UniqueMember"",

""parentName"": ""Unmapped Product""

}"

#Create the member

$restReponse = Invoke-RestMethod -Uri $CreateMbrURL -Method Post -Body $AddMemberPayload -Headers $Headers -ContentType "application/json"

If ($restReponse.Status -gt "0")

{write-host "Member Creation Unsuccessful - " + $restReponse.Details}

Else

{write-host $UniqueMember "- Member created Sucessfully"}

}

#Now all the metadata has been added, trigger a database refresh

$RefreshURL = $URL + $RESTPlanning + "/jobs" # Create the Rest refresh URL

# Generate the refresh cube payload

$RefreshPayload = "{

""jobType"": ""CUBE_REFRESH"",

""jobName"": ""Refresh_with_Kill""

}"

$restReponse = Invoke-RestMethod -Uri $RefreshURL -Method Post -Body $RefreshPayload -Headers $Headers -ContentType "application/json"

$RefreshURLResponse = $RefreshURL + "/" + $restReponse.jobID

$RefreshComplete = $false

while(-not $RefreshComplete)

{

#Call the status of the job

$Checkrunning = Invoke-RestMethod -Uri $RefreshURLResponse -Method Get -Headers $Headers -ContentType "application/json"

#check if the status is still running

if ($Checkrunning.status -eq "-1")

{

#wait for 5 secs

Start-sleep -seconds 5

}

Else

{

#if the refresh is a sucesss,

if($Checkrunning.status -eq "0")

{

#Job was sucessful

write-host "Refresh Sucessful - " $Checkrunning.details

}

Else

{

#job failed

write-host "Refresh Failed - " $Checkrunning.details

}

$RefreshComplete = $true

}

}

# invoke Request to Reload the data

$restReponse = Invoke-RestMethod -Uri $DataLoadURL -Method Post -Body $Payload -Headers $Headers -ContentType "application/json"

$responseURL = $restReponse.links[0].href

$ReloadComplete = $false

while(-not $ReloadComplete)

{

#Call the status of the job

$ReloadRunning = Invoke-RestMethod -Uri $responseURL -Method Get -Headers $Headers -ContentType "application/json"

#check if the status is still running

if ($ReloadRunning.Jobstatus -eq "RUNNING")

{

#wait for 5 secs

Start-sleep -seconds 5

}

Else

{

#If the load is a sucess, confirm

if($ReloadRunning.Jobstatus -eq "SUCCESS")

{

#job completed

write-host "Data Reload Completed Sucessfully - " $LoadFile $ReloadRunning.jobStatus- "Executed by" $ReloadRunning.executedBy

}

Else

#It may have failed for another reason (not metadata) - post details

{

#job failed

write-host "Data Reload Failed -" $LoadFile $ReloadRunning.details

}

$ReloadComplete = $true

}

}

}

$Jobcomplete = $true

}

}

}

Pete, this is absolutely the best REST/PBCS post on the internet, I have read so far!

Brilliant, Thanks.